Beyond the Network

This post is the third in a series. To read them in order, start here.

Imagine for a moment that, instead of treating each server (or container, or VM) as if it were one or more network hops away, the server were directly attached to the services and other servers that it needs to interact with most. This would increase efficiency and could solve quite a few of the consistency and consensus issues that exist today in modern infrastructure. If each network switch and router is already a specialized computer, why not further empower these devices to solve many of the problems we struggle with in infrastructure today? Quite a few specialized network appliances, such as load balancers, firewalls, and storage appliances, emerged in the late ’90s and early ’00s. These follow the same path of mixing the network with servers that provide one or more specialized functions.

The demand for ever-faster evolution of infrastructure capabilities and scale, and the fact that the network is treated as a dumb pipe or simply plumbing between nodes, both contribute to the friction of trying to build a dynamic infrastructure. This dumb-pipe approach forces all complexity into the software on server nodes, which makes distributed systems and solutions vastly more complex than necessary. Creating overlays and abstractions just increases complexity and trades away performance for flexibility.

If you accept that switches and routers are computers that are purpose-built for pushing packets, why not consider adding capabilities inside these computers? I know there will be naysayers who think this is a bad idea, and there will be cases where some of these things may very well be a bad idea. However, there is definitely a class of problems where this would be a far more elegant solution than what we have now. Removing some complexity and vastly improving performance and scalability seems pretty attractive. A message bus/queue comes to mind as a great example: tied into the network device itself, it could provide incredibly low latencies.

This approach would also leverage the power of persistent connections. If a server is attached to two other “direct attached switches/network devices” (for redundancy) and either side goes down, the other knows. This is an over-simplification that I ask you to overlook for now, as I realize there are exceptions and edge cases. Generally, when a physical server goes down, its network interface(s) go down too, which would immediately alert any service running on the attached switches that this node is down. There could also be a socket or other specialized service running in the switch that watches heartbeats, packet flow, and buffers, and communicates with a server-side service, maybe via an optimized coordination protocol. This would work well for local clusters, messaging, and similar scenarios. Your cluster needs consensus? No problem if you are only dealing with the consensus service running on the switch or router. Even doing cluster consensus across switches and routers would be superior.
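To make the failure-detection idea a little more concrete, here is a minimal sketch in Python of what a switch-resident membership service might track. Everything in it is hypothetical: `SwitchMembership`, its method names, and the one-second timeout are illustrative assumptions, not an existing switch API. It is a simulation with explicit timestamps, not code that runs on real network hardware.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class SwitchMembership:
    """Hypothetical switch-resident service tracking directly attached nodes."""

    heartbeat_timeout: float = 1.0  # assumed: seconds of silence before a node is presumed down
    last_seen: Dict[str, float] = field(default_factory=dict)
    link_up: Dict[str, bool] = field(default_factory=dict)
    listeners: List[Callable[[str, str], None]] = field(default_factory=list)

    def attach(self, node: str, now: float) -> None:
        """Register a node whose link has just come up."""
        self.link_up[node] = True
        self.last_seen[node] = now

    def heartbeat(self, node: str, now: float) -> None:
        """Record a heartbeat from a node that is still considered up."""
        if self.link_up.get(node):
            self.last_seen[node] = now

    def link_down(self, node: str) -> None:
        """Physical link loss: the node is marked down immediately."""
        if self.link_up.get(node):
            self.link_up[node] = False
            self._notify(node, "link-down")

    def sweep(self, now: float) -> None:
        """Mark nodes down whose link is up but whose heartbeats have stopped."""
        for node, up in list(self.link_up.items()):
            if up and now - self.last_seen[node] > self.heartbeat_timeout:
                self.link_up[node] = False
                self._notify(node, "heartbeat-timeout")

    def alive(self) -> List[str]:
        """Return the nodes currently considered up, sorted by name."""
        return sorted(node for node, up in self.link_up.items() if up)

    def _notify(self, node: str, reason: str) -> None:
        for listener in self.listeners:
            listener(node, reason)
```

A cluster service would register a listener and learn about a dead node either the moment its link drops or, for a hung server whose interface is still up, on the next sweep after the heartbeat timeout.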

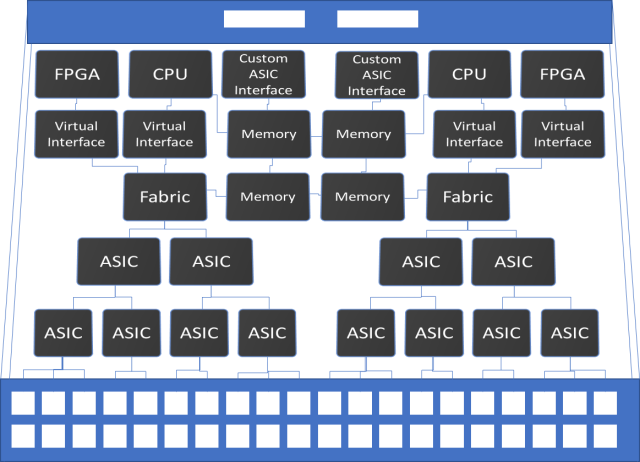

Now think about vendors providing prewritten services that run on switches and routers and can be connected together with serverless functions. This provides a much more flexible and powerful model of “wiring up” infrastructure. Have a function that needs to be more performant, and adding instances won’t help? Maybe it should be deployed on an FPGA, or perhaps a special ASIC is added to the switch. I realize that ASICs aren’t as portable or flexible, but perhaps there are common functions where it still makes sense to add ASICs (serialization/deserialization of a protocol, for example). Perhaps these FPGAs and ASICs could even be admin-insertable via cards or another modular interface wrapped around them? Or perhaps the switch itself is simply more programmable and adds special-function services running on general-purpose processors.
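As a rough illustration of that “wiring up” model, the sketch below simulates a switch runtime that hosts named queues and invokes small functions when messages arrive. The `SwitchRuntime` class and all of its methods are invented for this example; no vendor ships this API, and a real device would presumably expose something quite different.

```python
from collections import deque
from typing import Callable, Deque, Dict, List


class SwitchRuntime:
    """Hypothetical on-switch runtime: named queues plus functions they trigger."""

    def __init__(self) -> None:
        self.queues: Dict[str, Deque[bytes]] = {}
        self.triggers: Dict[str, List[Callable[[bytes], None]]] = {}

    def add_queue(self, name: str) -> None:
        """Create a queue, standing in for a prewritten vendor service."""
        self.queues[name] = deque()
        self.triggers[name] = []

    def on_message(self, queue: str, fn: Callable[[bytes], None]) -> None:
        """Wire a function to run for every message on the given queue."""
        self.triggers[queue].append(fn)

    def publish(self, queue: str, payload: bytes) -> None:
        self.queues[queue].append(payload)

    def run_once(self) -> int:
        """Drain each queue in creation order; return the number of messages handled."""
        handled = 0
        for name, pending in self.queues.items():
            while pending:
                message = pending.popleft()
                for fn in self.triggers[name]:
                    fn(message)
                handled += 1
        return handled


# Wire two queues together with a small "serverless" function.
runtime = SwitchRuntime()
runtime.add_queue("ingest")
runtime.add_queue("normalized")
results: List[bytes] = []
runtime.on_message("ingest", lambda m: runtime.publish("normalized", m.upper()))
runtime.on_message("normalized", results.append)
runtime.publish("ingest", b"hello")
runtime.run_once()
```

In this toy version the function is a plain Python callable. The point of the model is that the same wiring could, in principle, stay unchanged if a hot function were later moved onto an FPGA or ASIC.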

There is also an opportunity to enable more sophisticated systems that combine FPGAs, ASICs (TPUs, anyone?), GPUs, and CPUs to handle a greater and broader range of problems. These systems would look and act more like current big-iron routers. However, they would offer a much wider array of capabilities than what is available today. This might be an appropriate place to run a container hosting a critical piece of OSS infrastructure code, for example.

[Above is a Mockup of a Switch with FPGAs, Pluggable ASICs, and CPUs]

I have only scratched the surface of this concept in this post. Come back tomorrow to read part 4, where we will explore other possibilities.